How Artificial is Human Intelligence -- and Humanity? Consideration of AI Safety versus Safety from Human Artifice

Introduction

Much is now made of the potential dangers of artificial intelligence and the urgent need for its regulation (Yoshua Bengio, et al., "Large-scale Risks from Upcoming, Powerful AI Systems": managing AI risks in an era of rapid progress, Global Research, 25 October 2023). Media have focused on the recent assertion by Henry Kissinger in the light of his involvement in the World Economic Forum (AI Will Replace Humans within 5 Years, Slay, 28 October 2023). A more dramatic argument is presented by Geoffrey Hinton (John Davidson, Godfather of AI stands by extinction warning, Financial Review, 1 November 2023).

Such are the purported dangers for the world that the UK government urgently hosted an AI Safety Summit of leaders in the field at Bletchley Park, the iconic location of computer innovation in response to the threat of World War II (BBC, 28 October 2023). The Summit gave rise to the so-called Bletchley Declaration, reinforced by a statement by the UN Secretary-General (Statement at the UK AI Safety Summit, United Nations, 2 November 2023). It is unclear whether any form of AI was used there to enhance the quality of discourse typical of such events (Use of ChatGPT to Clarify Possibility of Dialogue of Higher Quality, 2023).

Failure to enhance the quality of interaction at such events raises the question as to whether they could be appropriately caricatured as "large language models" of an outmoded kind -- and dangerous as such -- with their own variant of the "hallucination" deprecated as a characteristic of AIs.

At the same time, the world is confronted by the unrestrained response by Israel to the attack from Gaza, and the many human casualties which are expected to result. However it is perceived, this is clearly the consequence of the application of human intelligence. In the case of the Israelis, their relative intelligence is widely recognized, if not a matter of ethnic pride (Nelly Lalany, Ashkenazi Jews rank smartest in world: studies show descendants of Jews from Medieval Germany, throughout Europe have IQ 20% higher than global average, Ynet News, 23 July 2011; Bret Stephens, The Secrets of Jewish Genius, The New York Times, 27 December 2019; Sander L. Gilman, Are Jews Smarter Than Everyone Else? Mens Sana Monographs, 6, 2008, 1; Kiryn Haslinger, A Jewish Gene for Intelligence? Scientific American, 1 October 2005).

The concern in what follows is how to distinguish, if that is possible, between the much publicized dangers of AI and those deriving from "human artifice". The nature of human artifice, and its dangerous consequences, has become confused by the focus on artificial intelligence. It is however clear that many global crises are the consequences of human artifice -- in the absence of any use of AI.

The Anthropocene Era might well be explored in such terms. AI as a safety concern is a latecomer to the scene -- itself a consequence of human artifice. The currently acclaimed urgency of the crisis posed by AI can even be seen as displacing the urgency previously accorded to climate change. The apparent shift of urgency to a strategic focus on the dangers of AI could even be seen as a convenience -- in the absence of viable responses to climate change.

Given the current multiplicity of global crises -- a polycrisis -- the seemingly absurd consequences of human artifice merit particular concern by comparison with those potentially to be engendered by artificial intelligence. The nature of intelligence has however long been a matter of debate, especially in the light of the search for extraterrestrial intelligence. Having engendered so many crises, it might even be provocatively asked whether "human intelligence", as acclaimed, will be appreciated as such by the future (Quest for Intelligent Life on Earth -- from a Future Perspective, 2023). However it might also be asked -- speculatively -- whether humanity is endowed with some form of "indwelling" intelligence, relevant to crisis response, elusive though it may be (Implication of Indwelling Intelligence in Global Confidence-building, 2012).

The particular concern in what follows is whether what is appreciated as "human intelligence" has progressively acquired characteristics which might be deprecated as "artificial". How artificial has "humanity" become? What indeed is the distinction between "artificial" and "artifice"? How artificial is one's intelligence as a human being? The question has been variously discussed (Arun Malhotra, The artificiality of human intelligence, The Daily Guardian, 27 July 2020; Sybille Krämer, The Artificiality of the Human Mind: A Reflection on Natural and Artificial Intelligence, 2022).

How is the distinction to be made between the "artificiality" of an agent of an organization representing humanity (or an expert representing a domain of expertise) and the "humanity" of that agent or discipline? Most obviously the question applies to those gathered at the AI Safety Summit, or to those charged with regulating AI in the future.

Some such progressive artificiality is to be expected as a form of human adaptation to an increasingly artificial environment and the skills required to survive within it. The adaptation to AI might well be understood in terms of the acquisition of features which are characteristic of AI -- and of its dangers to humanity. Dangers held to be associated with the technology of "artificial intelligence" then merit exploration as deriving from the unacknowledged projection of the artificiality increasingly associated with human intelligence.

This projection could be seen as an instance of misplaced concreteness -- the fallacy of reification. The dangers perceived in the technology are then to be understood as driven -- to some degree -- by questionable patterns of thinking. Ironically this understanding might be supported by insights into cognitive development from disciplines upholding perspectives which are the antithesis of those driving the development of AI technology. It is in this sense that the surprisingly extensive literature on AI from a variety of religious perspectives merits attention -- especially in the light of the challenge to ethics and morality now highlighted with respect to AI development.

The following exploration includes presentation of the challenge to ChatGPT as exemplifying an "interested party". That exchange helps to clarify the distinction which would seem to be of value at this time.

Dangerous development of AI?

Safety of AI? As implied above, it is appropriate to ask how "artificial" was the collective intelligence evident at the AI Safety Summit -- and how this might be best understood in the light of warnings immediately before the event (Sunak’s global AI safety summit risks achieving very little, warns tech boss, The Guardian, 20 October 2023; Editorial: Why the UK-led global AI summit is missing the point, Nature, 31 October 2023).

Following the questionable pattern of many international declarations of the past, attention will necessarily focus on the Bletchley Declaration signed at the AI Safety Summit:

- Alex McFarland: What is the Bletchley Declaration Signed by 28 Countries? (Unite.ai, 3 November 2023)

- Chris Stokel-Walker: UK AI summit: Countries agree declaration on frontier AI risks (New Scientist, 1 November 2023; UK AI summit: US-led AI pledge threatens to overshadow Bletchley Park, New Scientist, 2 November 2023)

- Matthew Sparkes: UK AI summit is a 'photo opportunity' not an open debate, critics say (New Scientist, 1 November 2023)

- Dan Milmo: Five takeaways from UK’s AI safety summit at Bletchley Park (The Guardian, 2 November 2023)

- Kiran Stacey: ‘It’s not clear we can control it’: what they said at the Bletchley Park AI summit (The Guardian, 12 November 2023).

As noted by Matthew Sparkes:

The reality is that technology – just as it has always done – is outpacing legislation. And if the world’s law-makers at least got up to speed on the latest developments in AI at Bletchley Park this week, it is hard to imagine they won’t need a refresher course by the time they meet again, with the face of AI having transformed once more. While summits might offer photo opportunities and the chance for politicians to rub shoulders with the likes of Elon Musk, no amount of gatherings can solve the problem of innovation outpacing legislation. (What did the UK's AI Safety Summit actually achieve? New Scientist, 2 November 2023)

Sparkes concludes with the provocative speculation: Perhaps this meeting could have been a ChatGPT-generated email, and saved the carbon expenditure of jetting everyone in.

Relevance to global governance? Prior to the Bletchley summit, the UN Secretary-General announced the creation of a new High-level Advisory Body on Artificial Intelligence, with the following focus:

- The Global AI Imperative: Globally coordinated AI governance is the only way to harness AI for humanity, while addressing its risks and uncertainties, as AI-related applications, algorithms, computing capacity and expertise become more widespread internationally.

- The UN's Response: To foster a globally inclusive approach, the UN Secretary-General is convening a multi-stakeholder High-level Advisory Body on AI to undertake analysis and advance recommendations for the international governance of AI.

- Calling for Interdisciplinary Expertise: Bringing together up to 38 experts in relevant disciplines from around the world, the Body will offer diverse perspectives and options on how AI can be governed for the common good, aligning internationally interoperable governance with human rights and the Sustainable Development Goals.

Curiously the comments prior to the declaration (and thereafter) tend to be vague in nature, especially with regard to both the risks and opportunities of AI. Emphasis is on the "tremendous" opportunities and the enthusiasm of techno-optimists, matched by the suggestions of the dangers to humanity by some -- readily to be recognized as fear-mongering typical of other crises.

Curiously missing is any emphasis on how AI might indeed enhance global governance, including the management of those risks and opportunities -- in the light of past inadequacies in response to crises, despite access to the highest relevant expertise. Specifically, it might be asked why no use was apparently made of AI to enhance the AI Safety Summit and the formulation of the Bletchley Declaration. Somewhat ironically, there is no indication of how AI will contribute to the working of the UN High-level Advisory Body on Artificial Intelligence -- or undermine it as a feature of the risks so strongly anticipated.

How might AI enhance interactions at the UN Summit of the Future planned for 2024 -- in the light of the UN Secretary-General's vision for the future of global cooperation in the form of a report titled Our Common Agenda (2021)?

Challenges for the UN system? It is appropriate to note that the UN system has been endowed with an International Computing Centre (UNICC) since 1971. It was created as an inter-organization facility to provide electronic data processing services. It was established by a Memorandum of Agreement among the United Nations (UN), the United Nations Development Programme (UNDP) and the World Health Organization (WHO), pursuant to resolution 2741 (XXV) of the United Nations General Assembly.

There has been relatively little trace of the involvement of the UNICC in AI, despite its participation in the AI for Good Global Summit series organized by the ITU:

- Artificial Intelligence for Social Good (UNICC, 13 August, 2018)

- AI for Health – UNICC’s Digital Trust Presentation at ITU (UNICC, 11 February 2019)

- Accelerating Sustainable Development with Hyperautomation (UNICC, 18 December, 2020)

- A Strengthened Threat Intelligence Posture for the UN Family (UNICC, 14 December, 2020)

Of potential relevance is the lack of recognition of the long-standing challenge to the relationship among UN agencies, and with variously associated bodies, as documented at the time of the creation of UNICC at the International Symposium on the Documentation of the United Nations (Acquisition and Organization of International Documentation, 1972). The introductory report to the Second World Symposium on International Documentation (1980) was republished under the title Societal Learning and the Erosion of Collective Memory (1980). It is appropriate to ask whether such challenges -- typical of problematic inter-agency dynamics at all levels of governance -- will be effectively addressed through the UN's new advisory body, whether or not it is assisted by AI.

"Dark AI" and globally organized crime? Missing from the current enthusiasm for institutional safeguards and regulation is any recognition of how AI may be exploited by constituencies skilled in the evasion of regulatory nets -- most obviously organized crime. This naivety is evident in the light of the development of the Dark Web, thereby suggesting the emergence of a "Dark AI":

- Margarita Del Val: Dark AI tools: How profitable are they in the underground ecosystem? (Outpost24, 15 August 2023)

- Tim Keary: The Future of Dark AI Tools: What to Expect Next? (Techopedia, 1 September 2023)

- Mark Minevich: How To Combat The Dark Side Of AI (Forbes, 28 February 2020)

- Daniel Iwugo: What is Dark AI? (Cybersecurity Education Initiative)

Such possibilities are only evident in the summit preoccupation with cybersecurity -- ironically matched by massive investment in cyberwarfare technology by those preoccupied with AI safety.

Given the role of intelligence in the "Great Game" of the 19th century, there is a case for a speculative reformulation in which the new variant is to be recognized as the "game" of humanity with AI, rather than as previously framed (Playing the Great Game with Intelligence: Authority versus the People, 2013).

Artificiality of human intelligence?

Arguably it is too readily assumed that "human intelligence" is the antithesis of "artificial intelligence". In the spirit of contrarian analysis, there is a case for questioning this assumption (Contrarian Analysis as a Means of Avoiding Intelligence Failure, 2023).

Question to ChatGPT: Could you comment on the significance of a question to humans: how artificial is your intelligence -- or on how that question might be formulated in a more fruitful manner? [Responses by ChatGPT 3.5 are presented below in shaded text]

The question "how artificial is your intelligence" is significant to humans for several reasons. It reflects the ongoing curiosity and concern about the capabilities and limitations of artificial intelligence (AI) systems like mine. Here are some aspects to consider:

To formulate this question in a more fruitful manner, one could consider the following approaches:

In summary, the question about the artificial nature of AI is significant, but formulating it in a more precise and context-specific manner can lead to more informative and constructive discussions about the capabilities and limitations of AI systems.

Question to ChatGPT: You have focused on the implications for AI, whereas my formulation sought to clarify how artificial human intelligence can be recognized to be. The emphasis is on what would justify recognition of some aspects of human intelligence as appropriately understood to be "artificial".

I see, you're interested in understanding how some aspects of human intelligence can be recognized as "artificial." This perspective addresses the idea that certain human behaviors or cognitive functions might appear artificial or non-genuine. To explore this further, you can consider the following points:

In essence, recognizing aspects of human intelligence as "artificial" often comes down to assessing authenticity, genuineness, and the complexity of human behaviors and responses. It involves considering the context, emotional depth, adaptability, and the presence of underlying intent. It's worth noting that the line between genuine and artificial behavior can be subjective and context-dependent, making it a complex and nuanced aspect of human psychology and sociology.

Authenticity of human intelligence?

Question to ChatGPT: I question whether authentic is the appropriate contrast to artificial. Is it not possible to be artificially authentic and authentically artificial? Could a human claim to be intelligent when the intelligence exhibited is artificial? Is a proportion of human intelligence more appropriately described as artificial?

You bring up a thought-provoking perspective, highlighting the complexity of the contrast between "authentic" and "artificial" intelligence. It is indeed possible for certain aspects of human intelligence to exhibit characteristics that might be described as "artificial," and there can be instances where something appears "artificially authentic" or "authentically artificial."

The distinction between authentic and artificial intelligence in humans is indeed nuanced, and it highlights the malleability and adaptability of human cognition. Some aspects of human intelligence are influenced by societal norms, cultural constructs, external influences, or learned behaviors, which can be considered "artificial" in the sense that they are not inherently or universally human.

Moreover, human intelligence is a diverse and multifaceted construct, encompassing various cognitive abilities and behaviors. While a substantial portion of human intelligence is innate and deeply rooted in our biology and evolution, another part is shaped by learned behaviors and external influences. This "artificial" aspect of human intelligence arises from the capacity to adapt, learn, and integrate external information and cultural norms.

In summary, it is possible to identify aspects of human intelligence that could be described as artificial, especially when they are influenced or shaped by external factors or when they intentionally mimic specific behaviors. The distinction between authentic and artificial intelligence in humans is indeed a complex and multifaceted issue, reflecting the flexibility and adaptability of human cognition.

Test for "artificiality" complementary to the Turing Test for "humanity"

Question to ChatGPT: Given efforts to develop a human dimension to AI, is it not appropriate to clarify any tendency for human intelligence to develop artificial characteristics? Whereas there is a Turing Test for the former, is there a case for a corresponding test for the latter?

The idea of clarifying the tendency for human intelligence to develop artificial characteristics is an intriguing one. As AI technologies advance and become increasingly integrated into various aspects of our lives, understanding the extent to which human intelligence might adopt or adapt to artificial characteristics is an important consideration. While there isn't a widely recognized "Turing Test" equivalent for assessing the development of artificial characteristics in human intelligence, there are several related concepts and areas of research to explore:

Developing a specific test to assess the extent to which human intelligence acquires artificial characteristics is a challenging task due to the complexity and diversity of human cognition. It would require careful consideration of the criteria, context, and ethical implications. However, ongoing research in human-computer interaction, ethics, and the integration of AI into various domains is working towards understanding these dynamics.

In summary, while there isn't a standardized test like the Turing Test for assessing artificial characteristics in human intelligence, there are various research avenues and interdisciplinary efforts to explore and understand how AI influences and potentially shapes human intelligence in ways that might exhibit artificial characteristics. This exploration is crucial as AI technologies continue to evolve and become more deeply integrated into our lives.

Further clarification was sought by presenting the above response from ChatGPT 3.5 to ChatGPT 4.0 for possible refinement. With respect to any supplement, a further consideration was that a key to any such test relates to the challenge of deepfakes. Could a human be distinguished from such a fake, or does the fakery exemplify the extent to which a human may be held to be artificial to some significant degree?

The concept of human intelligence acquiring artificial characteristics in the age of advanced AI is indeed a thought-provoking topic. As we intertwine our lives and decision-making processes with AI, the delineation between human and artificial cognition becomes increasingly blurred. There isn't a standardized assessment akin to the Turing Test for gauging the artificiality within human intelligence, but the emergence of deepfakes presents a unique lens through which to examine this phenomenon.

Refined response: The integration of AI into human cognition and decision-making raises the question of whether, and to what extent, human intelligence may begin to mirror artificial characteristics. Currently, there is no equivalent to the Turing Test specifically designed to measure the 'artificiality' of human intelligence. However, the interplay between humans and AI across various domains provides fertile ground for this inquiry:

Supplemental thoughts on deepfakes: Deepfakes present a compelling case study for the potential artificiality of human intelligence. They challenge our ability to discern genuine human expressions from AI-synthesized imitations. This technology not only questions the authenticity of digital content but also serves as a metaphor for the artificial characteristics that may be developing within human intelligence itself.

In essence, the rise of deepfakes could prompt a deeper exploration of how human intelligence adapts and responds to the capabilities of AI. As we become more adept at creating and interacting with sophisticated AI representations, the characteristics that we traditionally view as uniquely human may evolve, and our understanding of what constitutes 'artificiality' within human intelligence will need to be reexamined. This ongoing dialogue between human and machine intelligence, characterized by both cooperation and competition, will continue to shape our cognitive landscape.

Human augmentation and acquisition of artificial characteristics?

Question to ChatGPT: Could development of human augmentation technologies, such as brain-computer interfaces, to enhance human cognitive abilities (by integrating AI) be understood as enhancing artificial characteristics?

Yes, the development of human augmentation technologies, such as brain-computer interfaces (BCIs) that integrate AI to enhance human cognitive abilities, can be understood as enhancing artificial characteristics within the realm of human intelligence. Here's why:

While the development of BCIs and similar technologies offers the potential for significant cognitive enhancements and benefits, it also sparks debates about ethical, societal, and philosophical aspects. The question of whether the introduction of artificial elements into human cognition enhances or alters the nature of human intelligence is a complex and ongoing discussion.

Some view this as a form of "augmented intelligence," where the human and artificial elements combine to create a more capable and adaptable intelligence. Others may argue that it introduces "artificial" characteristics or behaviors into human thought processes, as AI-driven enhancements can be different in nature and origin from traditional human cognitive capacities.

In essence, the integration of AI into human cognition through augmentation technologies blurs the lines between human and artificial intelligence, raising fundamental questions about the nature and boundaries of human intelligence in the context of a rapidly evolving technological landscape.

Relative "inhumanity" of humans compared to AI in the future?

Question to ChatGPT: Is there then the possibility that development of AI may render AI "more human" than some humans whose proportion of intelligence could be more appropriately recognized as artificial to a high degree -- if not increasingly so?

The idea that AI could become "more human" than some humans, or that a portion of human intelligence might be recognized as increasingly artificial, is a thought-provoking concept and a subject of ongoing debate and speculation. Here are some key considerations:

In essence, while AI can surpass human performance in specific tasks, it's important to remember that the comparison between AI and human intelligence is not entirely straightforward. AI lacks many aspects of human intelligence, such as consciousness, empathy, and the ability to truly understand and navigate the nuances of the human experience. Human intelligence is shaped by a combination of innate capacities, cultural and social influences, and personal experiences, making it a unique and multifaceted phenomenon.

The integration of AI into human life presents opportunities for collaboration and augmentation, but it also requires careful consideration of the implications for human cognition, ethics, and society. The question of whether AI can become "more human" than some humans remains a complex and evolving area of exploration.

Human intelligence as characterized by "muddling through"?

Question to ChatGPT: I wonder whether what is conventionally framed as "human intelligence" -- by humans and by AI -- is primarily characterized by "muddling through", framed positively as creativity, although a more problematic framing might be appropriate.

The concept of "muddling through" or navigating through complex, ambiguous situations and problems is indeed a significant aspect of human intelligence. It encompasses the ability to adapt, think creatively, and find solutions even in uncertain or challenging circumstances. This capacity for creative problem-solving is a defining feature of human intelligence.

However, the term "muddling through" can sometimes be seen as somewhat dismissive or underestimate the depth of human intelligence. While it suggests a certain degree of trial-and-error and improvisation, these processes are often guided by a complex interplay of cognitive abilities, such as critical thinking, pattern recognition, emotional intelligence, and the application of accumulated knowledge and experiences. This multifaceted approach to problem-solving contributes to the richness and diversity of human intelligence.

Framing this aspect of human intelligence as "creativity" emphasizes its positive and constructive nature. Creativity is a fundamental part of human intelligence and is responsible for advancements in science, technology, art, and numerous other domains. It allows humans to find innovative solutions, develop new ideas, and adapt to changing circumstances.

However, it's worth acknowledging that "muddling through" or creative problem-solving can have its challenges and limitations. It may involve uncertainty and setbacks, and not all outcomes are successful. In some contexts, it can be seen as a less structured or systematic approach compared to the precision and predictability that AI systems can offer.

In summary, "muddling through" and creativity are integral components of human intelligence. While they represent the ability to navigate complex situations and adapt to changing conditions, it's essential to appreciate the complexity and depth of these processes, which involve a combination of cognitive, emotional, and experiential elements. Framing this aspect of human intelligence positively as "creativity" is valid, but it's also crucial to recognize the challenges and nuances associated with this approach.

Being "only human" as primary characteristic of "humanity"?

Question to ChatGPT: The phrase "only human" is often used to characterize and excuse problematic and irrational human behaviour. This conversation has not clarified the distinction from "human error", "muddling through", and "creativity" -- as they relate to "human augmentation" and any understanding of becoming "more human". Could you comment further?

The distinctions between "only human," "human error," "muddling through," "creativity," and how they relate to "human augmentation" and the concept of becoming "more human" are indeed nuanced and interconnected. Let's explore these concepts further:

The challenge lies in recognizing that these concepts are not mutually exclusive; they intersect and coexist in complex ways. "Only human" acknowledges our limitations, while "muddling through" and "creativity" showcase our adaptability and ingenuity. "Human error" is a manifestation of our imperfections, but it can also lead to creative problem-solving and learning.

When we introduce human augmentation technologies, we aim to leverage artificial elements to enhance our cognitive abilities. While this can improve specific aspects of decision-making and information processing, it doesn't eliminate human tendencies like errors or the need to muddle through complex situations. Instead, it complements and extends our capabilities.

The distinction between these concepts and their interplay can vary based on context and perspective. In essence, human augmentation allows us to enhance certain aspects of our cognitive abilities while recognizing that "only human" characteristics, including errors and creative problem-solving, remain intrinsic to our nature. The goal is not to replace these human attributes but to empower individuals to make more informed and effective decisions while maintaining their unique human qualities.

Dangerous enhancement of cognitive abilities?

Question to ChatGPT: These useful distinctions do not help to clarify the manner in which human augmentation technologies, leveraging artificial elements to enhance cognitive abilities, may have dangerous consequences. Clearly there is the possibility that "human augmentation" may conflict with becoming "more human" independently of AI. There is even a danger of conflating "human augmentation" with becoming "more human" -- obscuring the potential significance of the latter.

You raise a valid point about the potential dangers and conflicts that can arise with the development and implementation of human augmentation technologies. It is essential to distinguish between human augmentation and the concept of becoming "more human," as they represent distinct ideas and can indeed have different consequences and implications:

The potential danger lies in conflating these two concepts. If human augmentation is pursued without a careful consideration of how it aligns with or potentially conflicts with becoming "more human," it could result in a dehumanizing or dehumanized approach. Here are some key considerations:

In summary, while human augmentation technologies can offer significant benefits, there is indeed a danger in conflating them with the concept of becoming "more human". Careful consideration of the ethical, societal, and philosophical dimensions is essential to ensure that these technologies enhance rather than undermine our humanity. Balancing the pursuit of augmentation with a commitment to preserving core human qualities is a complex challenge in the era of AI and advanced technology.

Human augmentation versus Psychosocial development?

Question to ChatGPT: Preoccupation with human augmentation as understood from a technological perspective would seem to contrast fundamentally with understandings of psychosocial development from the perspective of psychoanalysis and the many schools of meditation otherwise concerned with subtler dimensions of awareness. Is the former to be understood as artificial in contrast with the latter?

The preoccupation with human augmentation from a technological perspective does indeed contrast fundamentally with understandings of psychosocial development from the perspective of psychoanalysis and other schools of thought concerned with subtler dimensions of awareness. These two approaches represent different paradigms and worldviews, each with its own set of values, assumptions, and implications.

The distinction between these two perspectives is not necessarily a matter of one being "artificial" and the other "authentic." Instead, it reflects different lenses through which we view and engage with the human experience. Some individuals and scholars may choose to embrace both perspectives, recognizing that they can complement and enrich each other, but others may see them as fundamentally different worldviews. Ultimately, the choice between these perspectives can depend on individual values, goals, and beliefs. Some may prioritize technological advancement as a means to enhance human capabilities, while others may value inner exploration and psychological growth. The key is to acknowledge the richness and diversity of human experience and the various ways in which we seek to understand and enhance it.

Conventional methodologies and "project logic"

The problematic trap of conventional "project logic" has been evoked in relation to the quest for patterns of coherence for future African management (Knowledge Gardening through Music, 2000; Metaphoric Entrapment in Time, 2000).

Question to ChatGPT: The challenge with the summary above is that global crises would seem to be engendered by the application of complex conventional methodologies -- of an ever more artificial nature -- requiring correspondingly artificial cognitive abilities. How these relate to psychosocial development of complementary human insights is yet to be fruitfully considered -- especially when the nature of those insights may themselves contribute to the crises.

You've raised a valid and complex point regarding the challenges posed by the application of complex, artificial methodologies and cognitive abilities in the context of global crises. The intersection between technological advancements, the application of artificial intelligence, and the psychosocial development of complementary human insights is indeed a critical and often contentious issue. Here are some key considerations:

The relationship between technological advancements and human insights in addressing global crises is indeed complex and multifaceted. It's crucial to recognize that both dimensions have unique contributions to make. Effective solutions often require a holistic approach that takes into account not only the technical aspects but also the ethical, social, and psychological dimensions of the challenges we face. Ultimately, the ongoing dialogue between the fields of technology, psychology, ethics, and social sciences is vital for navigating the complexities of the modern world and addressing global crises in a balanced and thoughtful manner.

Ineffectual application of psychosocial insights?

A much-cited problematic insight has been offered by James Hillman and Michael Ventura (We've Had a Hundred Years of Psychotherapy – and the World's Getting Worse, 1992). Contrasting responses are evoked (Hans Rosling, et al, Factfulness: Ten Reasons We're Wrong About the World – and Why Things Are Better Than You Think, 2018; By Almost Every Metric, the World is Getting Better, Reddit, 2021; John Lande, Is the World Really Falling Apart, or Does It Just Feel That Way? The New York Times, 12 July 2022).

Question to ChatGPT: As noted by a much-cited study to the effect that after 100 years of psychosocial insight the world has gotten worse, there is clearly a disconnect between effective "psychosocial development", the possibility of "balancing technological and human insights", "interdisciplinary collaboration" and "education and ethical frameworks". The progressive dependence on essentially artificial cognitive abilities is then of questionable efficacy in relation to forestalling "unintended consequences"

You've raised a valid concern regarding the state of the world despite a century of psychosocial insight, as well as the potential disconnect between psychosocial development, technological advancements, interdisciplinary collaboration, and ethical frameworks. It's crucial to recognize that addressing complex global issues is an ongoing challenge, and there are no simple solutions. Several factors contribute to this situation:

The progressive dependence on artificial cognitive abilities does indeed come with risks and challenges, and it is essential to consider potential unintended consequences. Striking a balance between technological advancements and human insights is an ongoing process that requires constant vigilance and adaptation. While it's true that the world faces significant and pressing challenges, the alternative to addressing them through interdisciplinary approaches, ethical considerations, and the responsible use of technology is not likely to lead to improved outcomes. Rather, continued efforts to harness the potential of both technological and human insights, combined with a commitment to addressing unintended consequences and ethical concerns, are crucial in working towards a more sustainable and equitable future.

Problematic regulation and oversight

Question to ChatGPT: You have emphasized that both "robust regulation" and "oversight" are necessary to mitigate potential dangers associated with human augmentation -- stressing that regulatory bodies should ensure the safe and ethical use of these technologies. Unfortunately the track record in that regard cannot be said to have reduced the emergence of highly problematic "unintended consequences". It is unclear whether AI will further exacerbate the situation -- with or without its envisaged regulation. Ironically problematic is the ambiguity of "oversight" in English, since it is also indicative of a blind spot and a matter "overlooked"

You've highlighted a valid concern regarding the effectiveness of regulation and oversight in mitigating the potential dangers associated with emerging technologies, including human augmentation and AI. The track record of regulation and oversight is indeed mixed, and it's true that some unintended consequences have arisen despite regulatory efforts. It's essential to acknowledge the challenges and limitations in this area:

Given these challenges, there's a need for ongoing refinement and adaptation of regulatory approaches to address the complexities of emerging technologies effectively. This includes enhancing transparency, engaging in public discourse, and considering a multidisciplinary approach that includes technical experts, ethicists, and social scientists. Regulation and oversight are not silver bullets, but they are essential components of responsible technology development. The question of whether AI will exacerbate these challenges or alleviate them depends on how regulatory frameworks are designed and implemented, as well as the extent to which collaboration and ethical considerations are prioritized. In essence, the evolution of technology and its regulatory landscape is a dynamic and ongoing process. It is essential to recognize the limitations while continuing to work towards regulatory approaches that mitigate risks, protect individuals and society, and foster the responsible and ethical development and use of emerging technologies.

Question to ChatGPT: Could you regenerate the response to include issues of overregulation and oversight deliberately designed to avoid awareness of a potential issue?

Certainly, it's important to acknowledge the potential issues of overregulation and the possibility of oversight that is designed to avoid awareness of certain problems. In the context of emerging technologies, including human augmentation and AI, these concerns can manifest in various ways:

These issues are significant and underscore the need for a balanced and well-considered approach to regulation and oversight. Effective regulation should strike a balance between fostering innovation and addressing risks and harms. It should also prioritize transparency, independence, and public engagement to avoid undue influence and the avoidance of awareness of potential problems. Achieving this balance requires ongoing vigilance and a commitment to the responsible and ethical development and use of emerging technologies.

Remedial potential and dangers of cyborgization and transhumanism

Cyborgization: The argument has been made by Joshua Bleiberg that We Are All Cyborgs Now (Brookings, 8 October 2014), namely that conceptually people have started to transform into cyborgs. Reference is made to a point by Benjamin Wittes and Jane Chong (Our Cyborg Future: law and policy implications, Brookings, September 2014). The argument has been presented otherwise by Frank Swain:

My brain is no longer tuned to life without prosthetics. Without my hearing aids, I hear worse than I did before I got them. The little electronic plugs have become an extension of myself. Although I can be physically separated from my hearing aids – I can take them out and hold them in my hand – my sense of hearing is not so easily picked apart. It exists partly in my ears, and partly in my devices. So I am now a cyborg out of necessity, not choice. (Cyborgs: The truth about human augmentation, BBC, 24th September 2014).

As argued in a valuable summary by Anton L. Grinin and Leonid E. Grinin: Cyborgization is a hot topic these days. This is an intriguing process that is the subject of many futuristic novels and which at the same time takes place right before our eyes (Cyborgization: To Be or Not to Be? Journal of Big History, 4, 2020, 3). The authors are concerned about the question of whether the time will come when a human will mainly or completely consist not of biological, but of artificial material.

Becoming a cyborg in that way has been framed as a question by Jorge Pelegrín-Borondo, et al (Does Ethical Judgment Determine the Decision to Become a Cyborg? Influence of Ethical Judgment on the Cyborg Market, Journal of Business Ethics, 161, 2020, 2575):

Today, technological implants to increase innate human capabilities are already available on the market. Cyborgs, understood as healthy people who decide to integrate their bodies with insideable technology, are no longer science fiction, but fact. The cyborg market will be a huge new business with important consequences for both industry and society. More specifically, cyborg technologies are a unique product, with a potentially critical impact on the future of humanity. In light of the potential transformations involved in the creation of "superhuman" cyborgs, ethics must be a cornerstone of cyborg marketing decisions.

Dependence versus remedial potential? With respect to how artificial is human intelligence becoming, the emphasis on increasing dependence on enabling devices obscures any concern with the range of meanings to be associated with such enhancement -- whether in cognitive terms or in terms of global management capacity. Despite the suggestive title, the emphasis on dependence is characteristic of the study by Ivana Greguric (The Age of the Cyborg: philosophical issues of human cyborgization and the necessity of prolegomena on cyborg ethics, 2022).

Clearly a primary focus of cyborgization is on quantitative aspects -- increased speed and competence in quantitative terms, together with increased connectivity and interactivity. Such understandings of intelligence distract from the far less obvious question of qualitative enhancements, as might be indicated by the integration of insights from disparate domains characteristic of creativity of wider significance. Of considerable relevance, as noted above, is how these become evident with respect to the UN's planned Summit of the Future in 2024 -- in the light of the UN Secretary-General's vision for the future of global cooperation in the form of a report titled Our Common Agenda (2021).

Expressed otherwise, to what extent is cyborgization to be understood as indicative of significant enhancements of wisdom -- however that might come to be understood? Science fiction has occasionally suggested that this might be the case. The point can however be emphasized by reference to "cognitive fusion" as a requirement for the timely control of a fighter jet through integration of data from multiple indicators. This is necessarily distinct from any elusive understanding of wisdom acquired over years of experience. The design considerations are discussed separately by comparison with those of nuclear fusion (Dematerialization and Virtualization: comparison of nuclear fusion and cognitive fusion, 2006).

Given the importance increasingly accorded to "narratives", understanding of "cognitive fusion" merits consideration in relation to the integrative function of myth and symbols. As discussed separately, it is readily assumed in the modern world that myth and symbol are only to be associated with outmoded modes of cognition (Cognitive Fusion through Myth and Symbol Making: archetypal dimensions, 2006).

This is to forget the immense investments in "image making" through advertising, public relations and news management, or the importance attached to the symbols by which individuals, groups, teams, corporations, nations and international bodies are identified -- and through which they may well define their own identities. Great attention is given to the "stories" or "narratives" by which the identity of entities is crafted in relation to others. Their ability to "reinvent" themselves through developing new stories is appreciated. Symbols may then be embedded in a connective tissue of myth.

Such arguments frame the question as to whether the focus on cyborgization misleadingly equates enhancement of artificiality with enhancement of intelligence -- which may well be a narrative favoured by many. Aspects of that emphasis are discussed separately (Cyborgs, Legaborgs, Finaborgs, Mediborgs: meet the extraterrestrials - them is us, 2013).

Transhumanism: From that perspective, cyborgization may be readily conflated with transhumanism, namely the philosophical and intellectual movement which advocates the enhancement of the human condition by developing and making widely available sophisticated technologies that can greatly enhance longevity and cognition (Calvin Mercer, et al, Religion and Transhumanism: the unknown future of human enhancement, 2015; Nick Bostrom, A History of Transhumanist Thought, Journal of Evolution and Technology, 14, 2005, 1).

Following the success of AI against grand masters of chess and go, as yet to be demonstrated is whether and how artificial enhancements endow individuals (or collectives) with qualitative capacities most valued as characteristic of human intelligence. Whilst the use of drugs to this end may be sought and acclaimed by individuals, it is less evident -- and more controversial -- as to whether this is confirmed and appreciated from other perspectives (Alexander Beiner, The Bigger Picture: how psychedelics can help us make sense of the world, 2023; William Richards, Sacred Knowledge: psychedelics and religious experiences, 2015).

Humanity framed by implication of AI for religion and ethical systems?

Distinct religions: Of particular relevance to the nature of humanity are the arrays of categories and concepts engendered by the theologies and logics of various religions in which such constructs are central to cognitive augmentation as mystical experience. It is therefore surprising to note the extensive literature regarding AI from various religious perspectives:

- Buddhism: a separate entry on Buddhism and artificial intelligence is offered by Wikipedia:

- Soraj Hongladarom: What Buddhism can do for AI ethics (MIT Technology Review, 6 January 2021)

- Somparn Promta and Kenneth Einar Himma: Artificial intelligence in Buddhist perspective (Journal of Information, Communication and Ethics in Society, 6, 2008, 2)

- Jitendra Uttam: Between Buddhist ‘Self-Enlightenment’ and ‘Artificial Intelligence’ (Religions, 14, 2023, 2)

- Ross Nervig: What A.I. Means for Buddhism (Lion's Roar, 5 June 2023)

- Thomas Doctor and Brie Linkenhoker: What Buddhism Can Teach Us About Artificial Intelligence -- And Ourselves (Templeton World, 23 March 2023)

Of some relevance, for example, is the experimental development of Roshibot, namely an artificial intelligence powered chatbot trained to converse about Zen Buddhist practice (Pat Pataranutaporn, et al: Buddha Bot: The Exploration of Embodied Spiritual Machine in Chatbot, Proceedings of the Future Technologies Conference (FTC), 2019).

- Christianity:

- Kate Lucky: AI Will Shape Your Soul: but how is up to us (Christianity Today, 11 September 2023)

- Joe Carter: The FAQs: What Christians Should Know About Artificial Intelligence (The Gospel Coalition, 18 April 2019)

- Jonathan Merritt: Is AI a Threat to Christianity? (The Atlantic, 3 February 2017)

- Kate Ott: How should Christians respond to the challenges of AI? (U.S. Catholic, 7 July 2023)

- Annie Eisner: AI and the Future of the Church (Relevant, 27 June 2023)

- George M. Coghill: Artificial Intelligence (and Christianity): Who? What? Where? When? Why? and How? (Studies in Christian Ethics, 36, 2023, 3)

AI is being used to enable responses to questions on the basis of the Bible (Bible.ai) -- a biblical AI "made for Christians". Digital Bible is acclaimed as the first AI-powered Bible.

- Hinduism:

- Mani Bhandari: AI and Hinduism: Navigating the Spiritual Frontier (AI Empower, 14 August 2023)

- Rohitash Chandra: AI, philosophy and religion: what machine learning can tell us about the Bhagavad Gita (The Conversation, 12 May 2022)

- God and robots: Will AI transform religion? (Hinduism Today, 26 October 2021)

An AI Hinduism app has been developed.

- Islam:

- Sparsh Ahuja: Muslim scholars are working to reconcile Islam and AI (Wired, 25 January 2021)

- Ammar Awais: Islam on Artificial Intelligence (Islam Explained, 5 November 2022)

- Ali A. Z: A Philosophical Approach to Artificial Intelligence and Islamic Values (IIUM Engineering Journal, 12, 2011, 6)

- Islam and AI: revolutionizing Islamic knowledge sharing with cutting-edge technology (Medium, 28 March 2023)

- Judaism:

- Yehuda Shurpin: What Is the Jewish View on AI and Intellectual Property? (Chabad)

- Valley Beit Midrash: Staying Human: Judaism and Artificial Intelligence (MyJewishLearning)

- Rhona Trauvitch: From ancient Jewish texts to androids to AI, a just-right sequence of numbers or letters turns matter into meaning (The Conversation, 13 October 2023)

- Artificial Intelligence (AI) and the Jews (JewishWikipedia.inf)

- AI-Powered Discovery of the Beauty of Torah (AskJudaism.com)

- Artificial Intelligence: The Newest Revolution in Torah Study? (Jewish Action)

FrumGPT is a chatbot trained to respond to Jewish questions, as described by Louis Keene (What AI for the Yeshiva world reveals about Orthodox Judaism, Forward, 5 May 2023).

With respect to Islam, a form of chatbot has been developed -- Islam and AI -- providing an interface for users to access information regarding their queries about Islam using the knowledge of the Quran. Considered a miracle of modern technology, Islamic AI is an app presented as providing an essential service for every Muslim and non-Muslim wishing to learn about Islam.

Examples are cited of the controversial use of AI to develop sermons (most obviously in Christianity), and to perform rituals (in Hinduism). It can be readily imagined that AI will be used to enable people to convert between religions, as an extension of interactive facilities currently available. The emergence of new religions is envisaged (Neil McArthur, Gods in the Machine? The rise of artificial intelligence may result in new religions, The Conversation, 16 March 2023).

There is an obvious provocation to any proposed use of AI in support of mystical experience -- especially when the emphasis is on the constructs and not on the experience:

- Marcin Frąckiewicz: The Subtle Role of AI in Modern Day Mysticism (TS2.space, 9 September 2023)

- Tamlin Magee: This Mystical Book Was Co-Authored by a Disturbingly Realistic AI (Vice, 24 March 2022)

- Gregory Smith: Can Artificial Intelligence Understand Mysticism? (Patheos, 8 March 2023)

- Roberto Musa Giuliano: Echoes of myth and magic in the language of Artificial Intelligence (AI and Society, 35, 2020)

- Elias Kruger: The Mystical Qubit: How Quantum Computers Inspire Christian Spirituality (AITheology, 19 August 2021)

- Martin Ringle: Mysticism as a philosophy of artificial intelligence (Behavioral and Brain Sciences, 3, 1980, 3)

- AI and Mysticism: using technology to decode the secrets of traditional texts and art forms (AITroT, 8 July 2023)

Implication of AI for global ethics, interfaith reconciliation and interdisciplinarity

Secular values and ethics: Considerable importance is now attached to ethics in relation to the future safe development of AI. The difficulty in that respect is the continuing confusion regarding ethics, especially in the light of the dubious legitimacy sought in "just war theory". The difficulty extends to what might be termed "just torture theory" and "just suffering theory", about which clarification might be sought from AI.

The more general difficulty is that ethics is problematically associated with human values about which there is further confusion -- despite frequent reference to them by leaders (Values, Virtues and Sins of a Viable Democratic Civilization, 2022). The United Nations has long remained a focus for the progressive articulation of human values -- dating from the Universal Declaration of Human Rights, variously replicated by regional organizations. The possibility of a global ethical framework remains elusive, despite the initiative of the Parliament of the World's Religions (Towards a Global Ethic: An Initial Declaration, 1993).

Less evident is how an ethical framework could then be elaborated for AI -- whether or not the assistance of AI is sought in its elaboration -- in the light of the many past initiatives from which a coherent pattern of values has not clearly emerged (despite claims to the contrary):

- Iason Gabriel and Kevin McKee: How can we build human values into AI? (Google DeepMind, 24 April 2023)

- Virginia Dignum: Responsible Artificial Intelligence: designing AI for human values (ITU Journal: ICT Discoveries, 1, 2017)

- Bernard Marr: The Dangers of not Aligning Artificial Intelligence with Human Values (Forbes, 1 April 2022)

- Shengnan Han, et al: Aligning Artificial Intelligence with Human Values: reflections from a phenomenological perspective (AI and Society, 37, 2022)

- W. Ian O'Byrne: Aligning AI with Human Values and Interests: an ethical imperative (28 September 2023)

- Margit Sutrop: Challenges of Aligning Artificial Intelligence with Human Values (Acta Baltica Historiae et Philosophiae Scientiarum, 8, 2020, 2)

- Laurence Devillers, et al: AI and Human Values: inequalities, biases, fairness, nudge, and feedback loops (Reflections on Artificial Intelligence for Humanity, 2021)

- Jolene Creighton: How Can AI Systems Understand Human Values? (Future of Life, 14 August 2019)

Theology: Of particular relevance is the articulation offered to one or more religions (or denominations) from a theological perspective, and how this may be enabled by AI. Without necessarily being able to distinguish the variety of religions encompassed, examples of such initiatives include:

- AI Theology

- Simeon Xu: Understanding AI from a Theological Perspective (Edinburgh Futures Institute, 3 August 2023)

- Nick Spencer: Is artificial intelligence a threat to theology? (Church Times, 10 March 2023)

- Mark Graves: Generative AI for Theology and Science (AI and Faith, 23 June 2023)

- Andy Otto: Redefining Humanity: a theological perspective on AI (God In All Things, 27 March 2023)

- Lluís Oviedo: Artificial Intelligence and Theology: looking for a positive -- but not uncritical -- reception (Zygon, 57, 2022, 4)

- David Maxwell: Artificial Intelligence: astonishing progress that raises theological questions (Concordia Theology, 29 June 2023)

- Peter Sherlock: Artificial Intelligence: a new horizon in theological education? (Vox)

- Tinku Thompson: Theology and AI (Efficient or Humanistic Society) (26 September 2023)

- Ben Geoffrey: AI and data-driven Christian Theology (5 July 2020)

Interfaith reconciliation? Missing from the focus on particular religions (whose unreconciled dogmas do so much to engender and justify violence) is the challenge of interfaith discourse and reconciliation, however this may be understood (or deprecated) from a theological perspective. Initiatives in that respect are as yet at an early stage:

- Marcin Frąckiewicz: The Role of AI in Interfaith Dialogue: facilitating conversations across belief systems (Artificial intelligence, 25 July 2023)

- Ken Chitwood: Reporting on AI and Faith: can new tech be a blessing or a curse? (Interfaith America, 17 July 2023)

- Robert Geraci: Decision-making and Public Policy at the Intersection of Religion and AI (G20 Interfaith Forum, 1 February 2022)

- Paolo Benanti: Faith in AI: A dialogue on ethics and technology (EU Policy Blog, 19 February 2021)

- Manfred Sing: Towards a Multi-Religious Topology of Islam: the global circulation of a mutable mobile (The Changing Landscapes of Cross-Faith Places and Practices, 9, 2019)

- Interfaith leaders join Vatican push for AI code of ethics (CathNews, 20 January 2023)

- Interfaith.ai: This facility is presented as "reinvigorating an age-old dialogue for our uncertain times" through the creation of the world’s most intuitive resource for faith and interfaith wisdom.

It is appropriate to suspect that individual religions will primarily make use of AI to reinforce avoidance of fundamental interfaith challenges -- as in the case of Abrahamic religions.

Question to ChatGPT: A major strength of large language models is evident in their ability to select from a vast array of categories and offer systematic presentations of extensive scope. How might this capacity apply to the disparate theologies of a wide array of religions -- typically in some degree of conflict with one another?

Large language models like GPT-3 have the capacity to provide systematic presentations of information from a wide array of sources, which can be particularly useful when it comes to understanding and presenting information about theologies from various religions, even those in conflict with each other. Here's how their capacity can be applied in this context:

It's important to note that while large language models can be valuable tools in this context, they should be used carefully and ethically. Their output should be critically evaluated, and users should be aware of potential biases in the training data. Additionally, engaging with experts in theology and interfaith dialogue is crucial to ensure the accuracy and sensitivity of the information provided by these models.

Question to ChatGPT: I have difficulty in seeing how these AI-enabled possibilities differ significantly from many decades of relatively ineffectual interfaith initiatives -- despite the expertise assembled -- as indicated by the continuing development of interfaith conflict.

You raise a valid point about the history of interfaith initiatives and the ongoing existence of interfaith conflicts despite these efforts. While AI-enabled possibilities may not represent a revolutionary change, they do offer some potential advantages and can complement traditional interfaith initiatives. It's essential to recognize that AI is not a panacea but can play a supportive role in addressing interfaith conflicts. Here's how AI-enabled possibilities may differ from previous initiatives:

However, it's important to acknowledge the limitations and challenges:

In summary, while AI can provide advantages in terms of scale, efficiency, and accessibility, it should be seen as a tool to support, not replace, human efforts in interfaith initiatives. Combining the strengths of AI with the expertise of human interfaith leaders and organizations can enhance the overall effectiveness of efforts to reduce conflicts and promote understanding among different religious groups.

Question to ChatGPT: The points made are indeed reasonable but with the limitation that it seems unlikely that significant breakthroughs will emerge if AI is applied as you suggest. Missing for me is the comparable challenge with the array of academic disciplines and the limited efficacy of interdisciplinary collaboration. Potential key to both is what AI might enable in terms of engendering a meta-perspective through meta-categories, as implied by meta-logic, meta-system, meta-discipline and meta-theology

Your point about the need for a "meta-perspective" and the potential for AI to contribute to this perspective is insightful. The concept of meta-categories, meta-logic, meta-systems, meta-disciplines, and meta-theology could indeed be valuable in addressing complex, interdisciplinary challenges, including interfaith conflicts. Here's how AI might help in this regard:

While AI can facilitate these aspects of a meta-perspective, it's important to acknowledge that AI is a tool created and directed by humans. The development of a meta-perspective and the application of AI in this context should be guided by the following principles:

In conclusion, while AI can play a role in enabling a meta-perspective for addressing complex interdisciplinary and interfaith challenges, its use should be part of a broader, collaborative effort that involves human experts, stakeholders, and interdisciplinary approaches. AI can be a valuable tool for facilitating the identification of commonalities and shared insights across diverse domains, but it is most effective when combined with human wisdom and ethical considerations.

Question to ChatGPT: Your precautionary response with regard to evaluation by experts unfortunately avoids a fundamental problem with experts, namely bias in their selection, and in their cognitive capacities. This is unfortunately well-framed by your reference to "human oversight" -- given that "oversight" is a synonym for "overlooking" and "blind spot". Missing for me with respect to "meta" is the sense in which this might be a perspective of higher dimensionality, requiring other techniques for its representation in relation to any subordinate array of categories of lower dimensionality.

You make a valid point about the potential for bias among experts and the limitations of human oversight. Bias in expert selection and cognitive capacities can indeed impact the effectiveness of any approach, including those involving AI. It's important to address these issues and explore alternative methods for achieving a higher-dimensional perspective, which transcends the limitations of any single discipline or viewpoint. A higher-dimensional perspective or "meta-perspective" could indeed require more advanced techniques for its representation and understanding. Here are some considerations for addressing these challenges:

It's important to recognize that achieving a higher-dimensional meta-perspective is an ongoing and complex endeavor. It requires the active involvement of experts, the application of advanced technologies, and the commitment to addressing potential biases and limitations. The goal is to create a more holistic and integrated understanding of complex topics, transcending the limitations of single-dimensional viewpoints.

Question to ChatGPT: Whilst I appreciate the suggestions, missing for me is the track record of unresolved difficulty in reconciling contrasting perspectives with regard to their application. There is a degree of irony to the fact that both disciplines and religions are all effectively competing "large language models" (as yet unassisted by AI). Part of the human tragedy lies in the inability to elaborate a consensual system of ethics -- or to understand why it has proved impossible to engender and elicit support for it. It would seem that there is a need for AI to address opposition in systemic terms in a manner explored by oppositional logic (and the associated geometry)

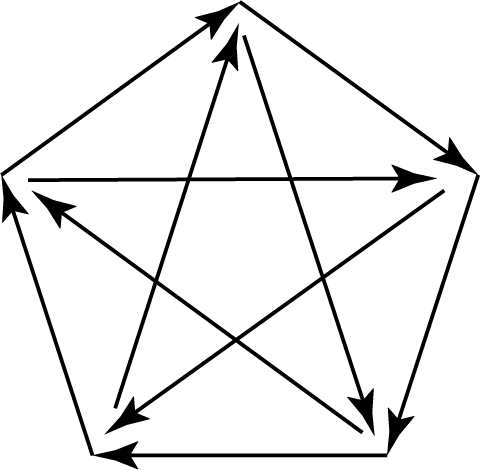

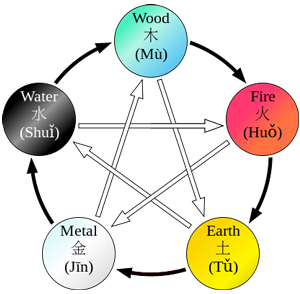

Your point about the unresolved difficulties in reconciling contrasting perspectives, whether in disciplines or religions, is indeed a significant challenge. The human capacity for competition, bias, and differing worldviews can hinder the establishment of a consensual system of ethics. Addressing these issues at a systemic level and exploring oppositional logic and geometry can be a valuable approach, and AI can potentially assist in this regard. Here are some considerations:

It's important to recognize that addressing the challenges of reconciling contrasting perspectives and fostering a consensual system of ethics is an ongoing process, and it may never lead to a completely homogeneous solution. However, AI can play a role in helping to identify and address systemic issues, reduce conflicts, and promote understanding among diverse groups. Additionally, human oversight and ethical considerations are essential in guiding the use of AI in these complex and sensitive areas.

Artificiality of human constructs and disengagement of intelligence therefrom?

Whether in the light of the engendering of interdisciplinary, interfaith, or ethical frameworks, it is from this perspective that any recognized processes for "disengaging" cognitively from more concrete frameworks in favour of subtler frameworks merit consideration as "detachment" from what is more "artificial" -- from conceptual "artifacts".

Spiritual development? There is now speculation as to whether and how AI might enhance some form of spiritual experience and its development, whether integrative or otherwise:

- Bhavesh A. Kinkhabwala: Can Meditation Practices Be Elevated, for the Higher Level of Consciousness, Taking Help of Artificial Intelligence? (SSRN, 6 July 2020)

- Yehuda Hausman: Ethics, Judaism, and AI: Can ChatGPT Strengthen Your Judaism? (Jewish Journal, 16 February 2023)

- Roman Perkowski: Can AI be used for spiritual and meditative practices? (Artificial Intelligence, 16 September 2023)

- Nika Simovich Fisher: Generative AI Has Ushered In the Next Phase of Digital Spirituality (Wired, 5 October 2023)

- Duncan Borg Ellul: The Intersection of AI and Spirituality: exploring the possibility of AI interpreting the spiritual realm (Linkedin, 21 August 2023)

- Steve McIntosh: The Spiritual Significance of the Rise of AI (The Developmentalist, 8 June 2023)

- Gideon Kimbrell: How Artificial Intelligence Will Improve Our Spiritual Life (Observer, 9 January 2017)

- Kamal Bhattacharyya: Artificial Intelligence and Spirituality: the four domains of concern (Times of India, 1 December 2021)

- J. Nandakumar: Artificial Intelligence: Spiritualising Technology the only way out (Bharat Prakashan, 27 May 2023)

- Kimberly Kizer: Understanding the Relationship Between Artificial Intelligence, Human Spiritual Evolution, and the Limits of Machine Consciousness (Medium)

- Paul C. Vitz: Artificial Intelligence and Spiritual Life (The Asbury Theological Journal, 44, 1989, 1)

AI might then be understood as performing a kind of "midwife function" for the future expression of "humanity" -- or even as a form of "guru" or spiritual counsellor.

Understanding human "unity" in diversity as a "shape"? Discourse readily implies an understanding of the "unity" of humanity, despite its diversity -- or because of the rich variety thereby characterized (Hans Köchler, Unity in Diversity: the integrative approach to intercultural relations, UN Chronicle, 49, 2012, 3). In the face of crises, appeals are made to the sense of human unity and to the need for global coordination through a "unified" approach. Comprehending such implications, and giving expression to them, is clearly a challenge in practice.

The inspiration, and the challenge, is readily indicated by reference to the visible "universe" and the myriad visible stars. With the visible universe "wrapped" around the planet, it is less evident how a meaningful sense of "unity" is to be appropriately derived. Efforts can be made to express this through juggling an understanding of numbers, as in the proposed popular alternative to the Australian national anthem: “We are one, but we are many and from all the lands of earth we come. We’ll share one dream and sing with one voice”.

Religions are typically explicit in inferring and emphasizing the unity of their primary deity on which theologies variously comment. The relation between number and theology is evident in mathematical theology (Mathematical Theology: Future Science of Confidence in Belief, 2011). Given the many iconic mathematicians of religious inspiration, the perspective of number theory then invites mathematical speculation (Sarah Voss, What Number Is God?: Metaphors, Metaphysics, Metamathematics, and the Nature of Things, 1995).

A contrasting perspective is explored in cosmology with regard to the shape of the universe -- specifically whether it is flat, spherical or hyperbolic. This suggests a corresponding approach to the shape of the knowledge universe (Jordan Ellenberg, Shape: The Hidden Geometry of Information, Biology, Strategy, Democracy, and Everything Else, 2021). This is implied by the preoccupations of interfaith discourse, interdisciplinarity and ethics (Francis Miksa, The DDC, the Universe of Knowledge, and the Post-Modern Library, 1998; Clare Boyd-Macrae, Different beliefs are mere shades of grey in the universe of faith, The Age, 9 May 2020; George F. R. Ellis, A Universe of Ethics, Morality, and Hope, Proceedings of the Second Annual Goshen Conference on Religion and Science, 2003).

As might be expected, the topological possibility is framed from various theological perspectives -- typically emphasizing that any such shape is beyond human comprehension (and should be so):

- Bartłomiej Skowron: The Explanatory Power of Topology in the Philosophy of God (2015)

- Daniel Schoch: Gods as Topological Invariants (arxiv, 1 April 2012)

- Barry M. Wright: Topology as an aid to Theological Formulation: a structure borrowed from mathematics (model, metaphor, curvature) (Northwestern University ProQuest Dissertations Publishing, 1984).

- Nathan L. Bostian: Theological Topology: placing the Trinity in reality (May 2020)

- Deep Bhattacharjee: The Striking Parallels between Ancient Hinduism and Modern Physics (Structures of Universe: Algebraic Topology, January 2021)

- Brittany E. Wilson: The Scriptural Shape of God: divine anthropomorphisms in synoptic perspective (New Testament Studies, 69, 2023, 2)

- S Prasannarajan: The Shape of God: an anatomical study of the Biblical God makes the divine intimately human (Open, 10 December 2021)

- William R. Schoedel: "Topological" Theology and some Monistic Tendencies in Gnosticism (Essays on the Nag Hammadi Texts in Honour of Alexander Böhlig, Brill, 1972)

- Topology in non-mathematical literature (MathOverflow, 2023)

- Does God have any shape? Is he different than we think? (Quora)

- What is the shape of Allah? (Quora)

- Does Allah have any physical form or shape? (The Pen Magazine, 5 December 2014)

- Does Allah have shape? (Islam Fact Check)

Religions variously evoke the nature of deity as a singularity and anticipation of a future manifestation of it in some form. An understanding of singularity is now evoked from a technological perspective and otherwise (Emerging Memetic Singularity in the Global Knowledge Society, 2009; Dynamics and Singularities in the Knowledge Universe, 2006). The challenge of describing the shape of a singularity is a theme of physics (Describing the shape of a singularity, Physics Stack Exchange, 2011).

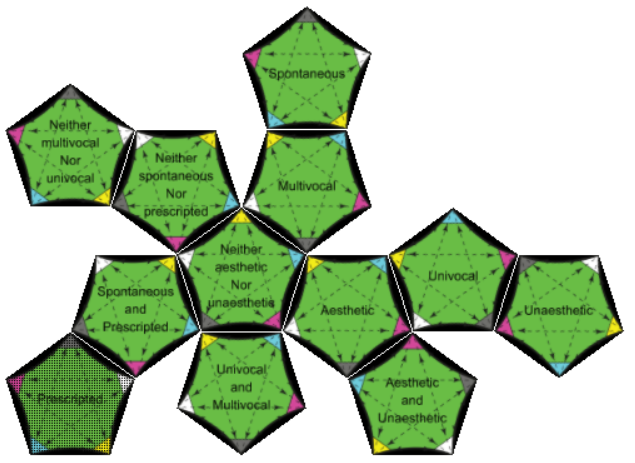

Question to ChatGPT: Your training would have covered a topic in cosmology: the "shape of the universe". Coherence with respect to interdisciplinarity or interfaith might then be explored through shape, geometry or topology -- rather than by number (as implied by "unifying" and its oversimplification). There are references to the "shape of God" in topology. A question would then be how complex would the topology need to be to hold insights of relevance to inter-faith "unity" or interdisciplinary "unity". A manifold of some kind? An orbifold? A shape necessarily beyond ordinary human comprehension?

Exploring the concepts of shape, geometry, and topology in the context of interdisciplinarity and interfaith unity is indeed an intriguing approach. The idea of the "shape of the universe" and its implications for understanding the interconnectedness of diverse fields of knowledge and belief systems can offer a unique perspective. Here are some thoughts on this concept:

It's important to note that this metaphorical approach should be used with sensitivity, as it involves complex theological and philosophical concepts. Additionally, it requires careful consideration of the cultural and religious implications of representing divine or sacred ideas in geometric or topological terms. In summary, the use of topology, geometry, and shape as metaphors for understanding interdisciplinarity and interfaith unity can offer a fresh perspective on these complex and multifaceted challenges. It acknowledges the richness and diversity of human knowledge and belief systems and emphasizes the need for a more holistic and interconnected approach.

Question to ChatGPT: Framed in that way, the primary symbols of disparate religions could be understood as lower dimensional projections of facets of a complex higher dimensional shape. Presumably such a shape could be configured by AI -- such as to enable those primary symbols to be recognized as projections in 2D or 3D. Less evident is the case of interdisciplinarity, since disciplines are typically not associated with symbols, except in the case of classical Greek deities.

Your suggestion of understanding primary symbols of disparate religions as lower-dimensional projections of facets of a complex higher-dimensional shape is a fascinating concept. It underscores the idea that different religious symbols may represent facets of a greater truth or understanding that transcends individual belief systems. While AI can play a role in modeling and representing such complex shapes, the challenge in interdisciplinarity is indeed different, as disciplines are not typically associated with readily recognizable symbols. Here are some thoughts on how this concept might apply to both religion and interdisciplinarity:

In interdisciplinary contexts, the challenge may lie in finding the common principles or ideas that unify diverse fields. While classical Greek deities provide a symbol for certain disciplines, the focus should be on identifying the deeper principles and values that connect various academic areas, rather than relying solely on symbolism. Ultimately, AI can play a role in representing and visualizing complex relationships, whether in religion or interdisciplinarity, to promote understanding, dialogue, and unity among diverse domains of knowledge and belief.
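The notion of distinct symbols as lower-dimensional projections of a single higher-dimensional shape can be illustrated, very modestly, with an ordinary cube. The following is a minimal sketch in Python, not a method proposed in the exchange above: the function names (`cube_vertices`, `project`) and the choice of a cube are assumptions made purely for illustration. Viewed face-on, the cube's corners collapse onto the four corners of a square; viewed along a body diagonal, the same corners form a hexagon around a central point.

```python
import itertools
import math


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    """Scale a vector to length 1."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cube_vertices():
    """The 8 corners of a cube centred on the origin (illustrative shape)."""
    return list(itertools.product((-1.0, 1.0), repeat=3))


def project(points, direction):
    """Orthographic projection of 3D points onto the plane perpendicular
    to `direction`; returns the set of distinct 2D images."""
    d = unit(direction)
    # Any vector not parallel to d can be used to build a basis of the plane.
    helper = (1.0, 0.0, 0.0) if abs(d[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = unit(cross(d, helper))
    v = cross(d, u)  # already unit length, since d and u are orthonormal
    images = set()
    for p in points:
        x = sum(pc * uc for pc, uc in zip(p, u))
        y = sum(pc * vc for pc, vc in zip(p, v))
        # Rounding merges images that differ only by floating-point noise.
        images.add((round(x, 6), round(y, 6)))
    return images


face_on = project(cube_vertices(), (0, 0, 1))    # looking along an axis: a square
corner_on = project(cube_vertices(), (1, 1, 1))  # looking along a body diagonal: a hexagon
print(len(face_on), len(corner_on))  # 4 7
```

An observer shown only the square, or only the hexagon, would have little reason to suspect that both derive from one and the same form. Whether any analogous higher-dimensional form could be configured for religious symbols remains, of course, the open question.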

Question to ChatGPT: The difficulties are clear in your framing. However, is the same approach relevant to the "simpler" case of a "global ethic" -- of which various drafts have not evoked the expected uptake? Is it the case that the facets have been presented too simplistically (as lists) in the absence of insight into a more appropriately complex "shape"? Again the facets call for recognition as lower dimensional projections of a higher dimensional form. The many proposed 2D "projections" of the Earth as a sphere help to make the point.

The concept of a "global ethic" and its lack of expected uptake is an interesting case to consider. It's possible that one of the challenges in promoting a global ethic lies in the way it has been presented, often as lists of principles or guidelines. This approach may not fully capture the complexity and interconnectedness of ethical principles that a global ethic should encompass. Your suggestion of presenting these facets as lower-dimensional projections of a higher-dimensional form is a compelling one. Here's how this approach might be relevant to the concept of a global ethic:

The key challenge in promoting a global ethic is to convey its complexity and interconnectedness effectively. By using the analogy of multidimensional shapes and AI-driven visualizations, it becomes possible to create a more nuanced and adaptable framework for understanding and promoting a global ethic. This approach can encourage deeper engagement and uptake of ethical principles on a global scale.
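The point made above with respect to 2D "projections" of the Earth can be made concrete with two standard map projections. The sketch below is offered only as an illustration, under the assumption of a unit sphere; the function names are hypothetical. Both projections place a point at the same horizontal position, but disagree increasingly about its vertical position as latitude rises: each flat map is faithful in one respect and distorting in another.

```python
import math


def equirectangular(lat_deg, lon_deg):
    """Plate carree: longitude and latitude (in radians) used directly as x and y."""
    return math.radians(lon_deg), math.radians(lat_deg)


def mercator(lat_deg, lon_deg):
    """Mercator: preserves angles, at the cost of stretching towards the poles."""
    lat = math.radians(lat_deg)
    return math.radians(lon_deg), math.log(math.tan(math.pi / 4 + lat / 2))


# Same points on the sphere, two different flat "facets".
for lat in (0, 30, 60, 80):
    _, y_eq = equirectangular(lat, 0)
    _, y_me = mercator(lat, 0)
    print(f"lat {lat:2d}: equirectangular y={y_eq:.3f}, mercator y={y_me:.3f}")
```

By analogy, a list of ethical principles might be understood as one such flat map: usable, but only if its characteristic distortions are recognized.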

Arguably any sense of unity is -- to a degree -- a construct of the human mind, in the light of the case made by George Lakoff and Rafael Núñez (Where Mathematics Comes From: how the embodied mind brings mathematics into being, 2000). It is then appropriate to ask whether there is a degree of correspondence between the sense of unity -- and its shape -- held to be characteristic of different domains (Theories of Correspondences -- and potential equivalences between them in correlative thinking, 2007).

Question to ChatGPT: Various international bodies have endeavoured to articulate ethical and value frameworks. These cannot be said to have evoked widespread appeal or consensus in practice -- beyond tokenism. Major emphasis is now placed on the relevance of an ethical/value framework to guide development of AI -- although AI assistance has not been sought in the elaboration of such a framework. Given the possibility that such a framework might need to be of a higher order of complexity (than simple lists) to encompass the variety of ways in which it will necessarily be perceived, could you comment on how AI might assist in this process?

AI can play a significant role in assisting the development of a higher-order, more nuanced, and comprehensive ethical and value framework for guiding the development of AI and addressing the challenges you've highlighted. Here's how AI can contribute to this process: